Is Your AI Hiring Tool Helping You—or Hurting You? 5 Questions to Ask Vendors Before You Buy

In 2018, news broke that Amazon had quietly scrapped an experimental AI recruiting tool.

Why?

Because it learned to downgrade resumes that included the word “women’s,” like “women’s chess club captain,” and even penalized graduates of women’s colleges. Instead of helping build a diverse workforce, the tool encoded and amplified past hiring biases.

Amazon’s misstep is far from unique. Across industries, companies are adopting AI hiring tools for resume screening, video interviews, and predictive assessments. The promise is faster, fairer, and more efficient hiring. But without scrutiny, these systems can replicate the very biases they claim to eliminate, exposing companies to lawsuits, regulatory fines, and public backlash.

So, before you sign on the dotted line with any AI vendor, ask these five critical questions.

1. How Was Your AI Model Trained, and On What Data?

AI tools learn patterns from past data. If that data is biased, the AI will be too.

Was the model trained on data representing diverse demographics?

Does it account for gender, race, disability, age, and other factors?

Has it been tested for unintended bias?

If a vendor dismisses these questions as “proprietary,” that’s a red flag. Remember, biased training data can turn well-meaning AI into a discrimination engine.

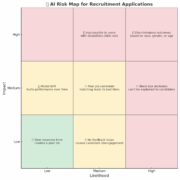

2. Do You Perform Regular Bias Audits?

Bias can creep in over time, especially as models adapt to new data or hiring trends. One audit years ago isn’t enough.

How often do you audit for bias?

Will you share those audit results?

Have you faced any regulatory investigations or lawsuits related to bias?

Vendors serious about ethical AI should be transparent and able to show compliance with laws like NYC’s Local Law 144, which requires an independent bias audit of automated hiring tools every year and a public summary of the results.
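To make an audit report easier to read, it helps to know the basic arithmetic behind it. Bias audits typically compare selection rates across demographic groups and report each group's rate relative to the highest one, often called an impact ratio. Below is a minimal Python sketch of that calculation. The function names, the sample numbers, and the group labels are all made up for illustration, and the 0.8 cutoff is the common "four-fifths" rule of thumb from U.S. employee selection guidance, not a guarantee of legal compliance.

```python
from collections import Counter

def selection_rates(records):
    """Return the share of candidates advanced per group.

    `records` is an iterable of (group, was_selected) pairs.
    This input shape is an assumption made for this sketch.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: rate / best for group, rate in rates.items()}

# Hypothetical screening outcomes: 40 of 100 advanced in group_a,
# 24 of 100 advanced in group_b.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 24 + [("group_b", False)] * 76
)

rates = selection_rates(records)
ratios = impact_ratios(rates)

# Rule-of-thumb threshold (assumption): ratios under 0.8 deserve a closer look.
FOUR_FIFTHS = 0.8
flagged = sorted(g for g, r in ratios.items() if r < FOUR_FIFTHS)

for group in sorted(ratios):
    print(f"{group}: rate={rates[group]:.2f}, impact ratio={ratios[group]:.2f}")
print("Needs review:", flagged)
```

A low ratio is a prompt for follow-up questions, not a verdict. Ask the vendor which groups and intersections they measure, what sample sizes sit behind each number, and what they did when a ratio came back low.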

3. Can You Explain How Your AI Makes Decisions?

Many AI tools operate as a “black box.” That’s a problem. If a candidate is rejected, both you and the candidate deserve to know why.

Can your tool provide human-readable explanations for its decisions?

Can rejected candidates request meaningful feedback?

Emerging regulations like the EU AI Act and certain U.S. state laws increasingly demand transparency. Tools that can’t explain themselves may fall short of compliance and fail to build trust with candidates.

4. Does Your System Comply With Relevant Laws and Regulations?

From the EEOC’s anti-discrimination rules to NYC’s audit mandates, the legal landscape for AI hiring is evolving fast.

Which laws and jurisdictions does your tool comply with?

How do you stay up to date on legal requirements?

Will you help us fulfill disclosure or audit obligations?

Vendors often advertise “ethical AI,” but it’s your company on the line if something goes wrong.

5. How Is Human Oversight Built Into the Process?

No matter how sophisticated, AI shouldn’t replace human judgment entirely.

Do you recommend human review of AI decisions?

Can your tool be tailored to fit our hiring processes?

How do you prevent recruiters from over-trusting the AI?

Without human checkpoints, even well-designed tools can produce unfair outcomes and expose you to legal and reputational risks.

The Bottom Line

AI in hiring is powerful, but it’s not plug-and-play. Tools that promise efficiency and objectivity can unintentionally deepen bias and discrimination, as Amazon’s experience shows.

Before investing in any AI hiring solution, ask hard questions. Demand transparency. And remember, technology should empower your hiring process, not replace your responsibility for fairness and compliance.
